On December 1, 2021, Amazon DynamoDB announced the new Amazon DynamoDB Standard-Infrequent Access (DynamoDB Standard-IA) table class, which helps you reduce your DynamoDB costs by up to 60 percent for tables that store infrequently accessed data.

The DynamoDB Standard-IA table class is ideal for use cases that require long-term storage of data that is infrequently accessed, such as application logs, old social media posts, e-commerce order history, and past gaming achievements.

Benefits ✨

| Optimize for cost | Same performance, durability, and data availability | No management overhead |
|---|---|---|
| Optimize the costs of your DynamoDB workloads based on your tables’ storage requirements and data access patterns. | DynamoDB Standard-IA tables offer the same performance, durability, and availability as DynamoDB Standard tables. | Manage table classes using the AWS Management Console, AWS CloudFormation, or the AWS CLI/SDK. |

Move your data from Standard to Standard-IA table class

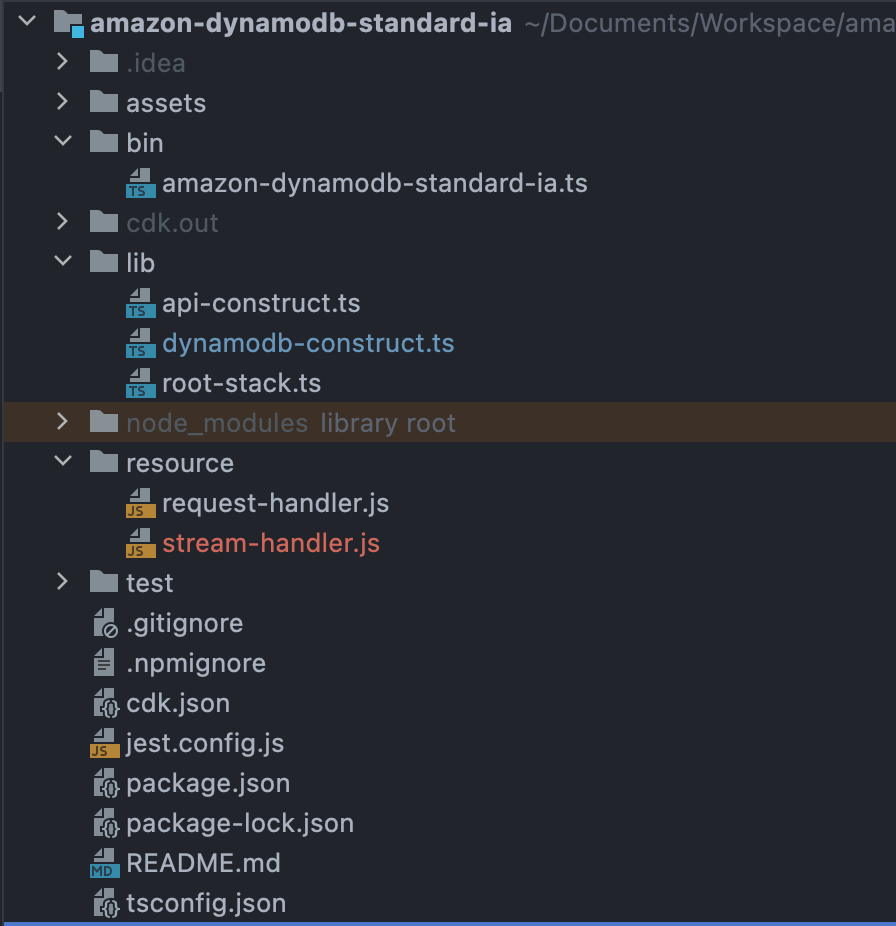

In this blog post, I show an example of how to do it with:

- 2 tables (Standard and Standard-IA)
- Amazon DynamoDB Streams
- Amazon DynamoDB TTL
- Amazon API Gateway
- AWS Lambda
- AWS Cloud Development Kit

All the source code is available on GitHub.

What are we going to build? 🚧

Using the AWS Cloud Development Kit (AWS CDK), we will build an Amazon API Gateway REST API that triggers a Lambda function, which inserts new items into our first table (Standard class).

Each item is written with a TTL attribute. Once the TTL expires, DynamoDB deletes the item (TTL deletion runs in the background, so it can lag behind the expiry time), and the deletion appears as a record in the table's DynamoDB Stream. A Lambda function that processes the stream then writes the deleted item to our second table, which uses the Standard-IA class.
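Because TTL deletion happens in the background, an expired item can stay readable for some time before it is removed and shows up in the stream. As a minimal sketch (the helper names `ttlFromNow` and `isExpired` are hypothetical and not part of the original project), the TTL value and a client-side expiry check could look like this:

```typescript
// Hypothetical helpers, not part of the original project.
// DynamoDB TTL expects a Number attribute holding Unix epoch time in seconds.

/** Returns the epoch-seconds timestamp `seconds` after `now`. */
function ttlFromNow(seconds: number, now: Date = new Date()): number {
  return Math.floor(now.getTime() / 1000) + seconds;
}

/** True when the TTL has passed, even if DynamoDB has not deleted the item yet. */
function isExpired(ttl: number, now: Date = new Date()): boolean {
  return ttl < Math.floor(now.getTime() / 1000);
}

// An item created now that should expire in one minute.
const ttl = ttlFromNow(60);
console.log(ttl, isExpired(ttl));
```

A reader of the first table could use a check like `isExpired` to ignore items that are already past their TTL but not yet deleted.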

Create a new AWS CDK project

```bash
npx cdk init app --language=typescript
```

Create a Construct for our API Gateway

In the code below, we create a Lambda function, define the IAM policy statement it needs, and attach that statement to the function's role as an inline policy. Then we create an API Gateway REST API and integrate the Lambda function with its POST method.

lib/api-construct.ts

```typescript
import * as lambda from "aws-cdk-lib/aws-lambda"
import * as apiGateway from "aws-cdk-lib/aws-apigateway"
import * as iam from "aws-cdk-lib/aws-iam"
import {Construct} from "constructs";

interface Props {
    tableName: string;
    tableArn: string;
}

export class API extends Construct {
    constructor(scope: Construct, id: string, props: Props) {
        super(scope, id);

        // Permissions for our Lambda function to putItem in DynamoDB table
        const dynamoDBPolicy = new iam.PolicyStatement({
            actions: ['dynamodb:PutItem'],
            resources: [props.tableArn],
        });

        const requestHandler = new lambda.Function(this, 'RequestHandler', {
            code: lambda.Code.fromAsset('resource'),
            runtime: lambda.Runtime.NODEJS_14_X,
            handler: 'request-handler.put',
            environment: {
                TABLE_NAME: props.tableName
            }
        });

        requestHandler.role?.attachInlinePolicy(new iam.Policy(this, 'putItem', {
            statements: [dynamoDBPolicy]
        }))

        requestHandler.role?.addManagedPolicy(
            iam.ManagedPolicy.fromAwsManagedPolicyName(
                'service-role/AWSLambdaBasicExecutionRole',
            ),
        );

        const api = new apiGateway.LambdaRestApi(this, 'DynamoDB', {
            handler: requestHandler,
            proxy: false
        });

        const apiIntegration = new apiGateway.LambdaIntegration(requestHandler);
        const items = api.root.addResource('items')

        const itemModel = new apiGateway.Model(this, "model-validator", {
            restApi: api,
            contentType: "application/json",
            description: "To validate the request body",
            modelName: "itemModel",
            schema: {
                type: apiGateway.JsonSchemaType.OBJECT,
                required: ["details"],
                properties: {
                    details: {
                        type: apiGateway.JsonSchemaType.STRING
                    }
                }
            },
        });

        items.addMethod('POST', apiIntegration, {
            requestValidator: new apiGateway.RequestValidator(
                this,
                "body-validator",
                {
                    restApi: api,
                    requestValidatorName: "body-validator",
                    validateRequestBody: true,
                }
            ),
            requestModels: {
                "application/json": itemModel,
            }
        })
    }
}
```
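The `itemModel` schema in this Construct accepts a JSON object with a required string property `details`. To make that contract concrete, here is a small sketch of the same check in plain TypeScript. The `ItemRequest` type and the `isItemRequest` guard are hypothetical names used for illustration only; in the deployed stack, API Gateway performs the validation before the Lambda function is invoked.

```typescript
// Hypothetical illustration of the request contract enforced by the model.
interface ItemRequest {
  details: string;
}

// Returns true only for objects that carry a string `details` property.
function isItemRequest(body: unknown): body is ItemRequest {
  return (
    typeof body === "object" &&
    body !== null &&
    typeof (body as Record<string, unknown>).details === "string"
  );
}

const accepted = isItemRequest(JSON.parse('{"details": "order #1234"}'));
const rejected = isItemRequest(JSON.parse('{"detail": "typo in the key"}'));
console.log(accepted, rejected); // true false
```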

Request handler source code

Now we will write our Lambda function logic to handle POST requests and insert new items into our DynamoDB table.

In the previous sample, we set the function's code path to the resource directory:

```typescript
const requestHandler = new lambda.Function(this, 'RequestHandler', {
    code: lambda.Code.fromAsset('resource'),
    runtime: lambda.Runtime.NODEJS_14_X,
    handler: 'request-handler.put',
    environment: {
        TABLE_NAME: props.tableName
    }
});
```

resource/request-handler.js

```javascript
const crypto = require('crypto')
const AWS = require('aws-sdk')

const dynamo = new AWS.DynamoDB.DocumentClient();

const REQUIRED_ENVS = ["TABLE_NAME"]
const SEC_IN_A_MINUTE = 60;

// Build an API Gateway proxy response
const response = (statusCode, body) => {
    return {
        statusCode,
        headers: {
            "content-type": "application/json"
        },
        body
    }
}

const put = async (event) => {
    const missing = REQUIRED_ENVS.filter((env) => !process.env[env])
    if (missing.length)
        throw new Error(`Missing environment variables: ${missing.join(', ')}`);

    console.log(`EVENT: ${JSON.stringify(event, null, 2)}`)

    try {
        const {details} = JSON.parse(event.body);
        const uuid = crypto.randomBytes(8).toString('hex')

        // The item expires one minute from now.
        // The TTL attribute must hold Unix epoch time in seconds.
        const date = new Date();
        date.setSeconds(date.getSeconds() + SEC_IN_A_MINUTE);
        const ttl = Math.floor(date.getTime() / 1000);

        const item = {
            id: uuid,
            details,
            ttl
        }
        const params = {
            TableName: process.env.TABLE_NAME,
            Item: item
        };

        await dynamo.put(params).promise();
        return response(200, JSON.stringify(item, null, 2))
    } catch (e) {
        return response(500, e.toString())
    }
}

module.exports = {
    put
}
```

DynamoDB table Construct

Now we create our DynamoDB Construct. Nothing special here: we set a few table properties, most importantly the time-to-live attribute.

lib/dynamodb-construct.ts

```typescript
import {Construct} from "constructs";
import * as dynamodb from "aws-cdk-lib/aws-dynamodb";

interface Props {
    // e.g. dynamodb.TableClass.STANDARD_INFREQUENT_ACCESS
    tableClass?: dynamodb.TableClass;
}

export class DynamoDBTable extends Construct {
    public readonly table: dynamodb.Table;

    constructor(scope: Construct, id: string, props?: Props) {
        super(scope, id);

        this.table = new dynamodb.Table(this, 'Table', {
            partitionKey: { name: 'id', type: dynamodb.AttributeType.STRING },
            billingMode: dynamodb.BillingMode.PAY_PER_REQUEST,
            stream: dynamodb.StreamViewType.NEW_AND_OLD_IMAGES,
            timeToLiveAttribute: "ttl",
            ...props
        });
    }
}
```

DynamoDB Stream handler Construct

Before bringing everything together, we will create an EventSourceMapping resource. It creates a mapping between an event source and an AWS Lambda function. Lambda reads items from the event source and triggers the function.

This Construct is very similar to the API Construct: it creates a Lambda function and its IAM policies. On top of that, we add the dynamoDBStreamPolicy and the EventSourceMapping.

lib/dynamodb-stream-construct.ts

```typescript
import {Construct} from "constructs";
import * as iam from "aws-cdk-lib/aws-iam";
import * as lambda from "aws-cdk-lib/aws-lambda";

interface Props {
    tableName: string;
    tableArn: string;
    tableStreamArn?: string;
    handler: string;
    resource?: string;
}

export class DynamoDBStream extends Construct {
    constructor(scope: Construct, id: string, props: Props) {
        super(scope, id);

        const dynamoDBPolicy = new iam.PolicyStatement({
            actions: [
                "dynamodb:PutItem",
            ],
            resources: [props.tableArn],
        });

        const dynamoDBStreamPolicy = new iam.PolicyStatement({
            actions: [
                "dynamodb:DescribeStream",
                "dynamodb:GetRecords",
                "dynamodb:GetShardIterator",
                "dynamodb:ListStreams",
            ],
            resources: [props.tableStreamArn || "*"],
        });

        const fn = new lambda.Function(this, 'RequestHandler', {
            code: lambda.Code.fromAsset(props?.resource || 'resource'),
            runtime: lambda.Runtime.NODEJS_14_X,
            handler: props.handler,
            environment: {
                TABLE_NAME: props.tableName
            }
        });

        fn.role?.attachInlinePolicy(new iam.Policy(this, 'putItem', {
            statements: [dynamoDBPolicy, dynamoDBStreamPolicy]
        }))

        fn.role?.addManagedPolicy(
            iam.ManagedPolicy.fromAwsManagedPolicyName(
                'service-role/AWSLambdaBasicExecutionRole',
            ),
        );

        const source = new lambda.EventSourceMapping(this, 'EventSourceMapping', {
            target: fn,
            eventSourceArn: props.tableStreamArn,
            startingPosition: lambda.StartingPosition.TRIM_HORIZON,
            batchSize: 1,
        });

        const cfnSource = source.node.defaultChild as lambda.CfnEventSourceMapping;

        cfnSource.addPropertyOverride('FilterCriteria', {
            Filters: [
                {
                    Pattern: `{ \"eventName\": [\"REMOVE\"] }`,
                },
            ],
        });
    }
}
```

Stream handler source code

As you may have noticed in the previous snippet, we filter the event source so that only REMOVE events reach the function. There is therefore no need to filter inside our Lambda function:

```typescript
cfnSource.addPropertyOverride('FilterCriteria', {
    Filters: [
        {
            Pattern: `{ \"eventName\": [\"REMOVE\"] }`,
        },
    ],
});
```
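Note that a filter on `REMOVE` alone matches every deletion, including items deleted manually. If only TTL expirations should be archived, the filter could additionally match on the `userIdentity` field, which DynamoDB sets to the service principal `dynamodb.amazonaws.com` for TTL deletes. Here is a minimal sketch of building such a pattern. The `ttlDeleteFilter` constant is a hypothetical name, and the resulting string would take the place of the `Pattern` value in the override.

```typescript
// Hypothetical sketch: an event filter pattern that only matches TTL deletions.
// Stream records for TTL deletes carry userIdentity.type "Service" and
// userIdentity.principalId "dynamodb.amazonaws.com".
const ttlDeleteFilter = {
  eventName: ["REMOVE"],
  userIdentity: {
    type: ["Service"],
    principalId: ["dynamodb.amazonaws.com"],
  },
};

// FilterCriteria expects each pattern as a JSON string.
const pattern: string = JSON.stringify(ttlDeleteFilter);
console.log(pattern);
```

Building the pattern with `JSON.stringify` also avoids hand-escaping quotes inside a template literal.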

resource/stream-handler.js

```javascript
const crypto = require('crypto')
const AWS = require('aws-sdk')

const dynamo = new AWS.DynamoDB.DocumentClient();

const REQUIRED_ENVS = ["TABLE_NAME"]

const put = async (event) => {
    const missing = REQUIRED_ENVS.filter((env) => !process.env[env])
    if (missing.length)
        throw new Error(`Missing environment variables: ${missing.join(', ')}`);

    console.log(`EVENT: ${JSON.stringify(event, null, 2)}`)

    try {
        // batchSize is 1, so each invocation carries a single record.
        // OldImage is the deleted item in DynamoDB attribute-value format.
        const [{dynamodb: {OldImage: deletedItem}}] = event.Records;
        const uuid = crypto.randomBytes(8).toString('hex')

        const item = {
            id: uuid,
            archive: deletedItem
        }
        const params = {
            TableName: process.env.TABLE_NAME,
            Item: item
        };

        // await here so that a failed write is handled by the catch block
        return await dynamo.put(params).promise();
    } catch (e) {
        throw new Error(e);
    }
}

module.exports = {
    put
}
```
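The `OldImage` in a stream record uses DynamoDB's attribute-value format (for example `{ "id": { "S": "abc" } }`), so the handler stores that typed structure under `archive`. If plain values are preferred in the archive table, the image can be converted first. The AWS SDK ships a converter for this (`AWS.DynamoDB.Converter.unmarshall` in SDK v2). The sketch below is a simplified, dependency-free stand-in with hypothetical names that handles only a few attribute types, just to show the idea:

```typescript
// Simplified stand-in for the SDK's unmarshaller (hypothetical, illustration only).
// Supports S, N, BOOL, NULL, M and L; sets and binary types are not handled.
type AttributeValue =
  | { S: string }
  | { N: string }
  | { BOOL: boolean }
  | { NULL: boolean }
  | { M: Record<string, AttributeValue> }
  | { L: AttributeValue[] };

type Plain = string | number | boolean | null | Plain[] | { [key: string]: Plain };

// Convert one typed attribute value into a plain JavaScript value.
function unmarshallValue(value: AttributeValue): Plain {
  if ("S" in value) return value.S;
  if ("N" in value) return Number(value.N); // numbers travel as strings
  if ("BOOL" in value) return value.BOOL;
  if ("NULL" in value) return null;
  if ("L" in value) return value.L.map(unmarshallValue);
  return unmarshallImage(value.M);
}

// Convert a whole image (attribute name -> typed value) into a plain object.
function unmarshallImage(image: Record<string, AttributeValue>): { [key: string]: Plain } {
  const result: { [key: string]: Plain } = {};
  for (const [key, value] of Object.entries(image)) {
    result[key] = unmarshallValue(value);
  }
  return result;
}

// Shaped like the OldImage of an item written by the request handler.
const oldImage: Record<string, AttributeValue> = {
  id: { S: "3f9a1c2b4d5e6f70" },
  details: { S: "order #1234" },
  ttl: { N: "1638316860" },
};
console.log(unmarshallImage(oldImage));
```

In the handler, the converted object could then be stored under `archive` instead of the raw image.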

Wrap everything 🎁

Now it's time to write our Root stack. We import all our custom Constructs and instantiate them.

Pay particular attention to the DynamoDBStream Construct: we pass it the tableStreamArn of the first table, along with the tableName and tableArn of the second table.

lib/root-stack.ts

```typescript
import * as dynamodb from "aws-cdk-lib/aws-dynamodb"
import {Construct} from 'constructs';
import {Stack, StackProps} from 'aws-cdk-lib';
import {API} from "./api-construct";
import {DynamoDBTable} from "./dynamodb-construct";
import {DynamoDBStream} from "./dynamodb-stream-construct";

export class RootStack extends Stack {
    constructor(scope: Construct, id: string, props?: StackProps) {
        super(scope, id, props);

        const standardClassTable = new DynamoDBTable(this, 'DynamoDBStandard')
        const standardIaClassTable = new DynamoDBTable(this, 'DynamoDBStandardIA', {
            tableClass: dynamodb.TableClass.STANDARD_INFREQUENT_ACCESS,
        })

        new API(this, 'API', {
            tableName: standardClassTable.table.tableName, // 1st table name
            tableArn: standardClassTable.table.tableArn, // 1st table ARN
        });

        new DynamoDBStream(this, 'StreamHandler', {
            tableName: standardIaClassTable.table.tableName, // 2nd table name
            tableArn: standardIaClassTable.table.tableArn, // 2nd table ARN
            tableStreamArn: standardClassTable.table.tableStreamArn, // 1st table stream ARN
            handler: 'stream-handler.put'
        });
    }
}
```

bin/amazon-dynamodb-standard-ia.ts

```typescript
#!/usr/bin/env node
import 'source-map-support/register';
import * as cdk from 'aws-cdk-lib';
import { RootStack } from '../lib/root-stack';

const app = new cdk.App();

new RootStack(app, 'AmazonDynamodbStandardIaStack', {
    stackName: 'DynamoDB-Standard-class-to-DynamoDB-Standard-IA-class-example'
});
```

Time to deploy 🚀

Now it's time to deploy our stack!

```bash
npx cdk deploy
```

Conclusion

In this blog post you have seen how to use DynamoDB Streams and DynamoDB TTL to migrate your data from a Standard class table to a Standard-IA class table.

You can find all the source code on GitHub in this repository.

Please feel free to share and follow me on Twitter (@nathanagez) to stay in touch!